Claude Opus 4.6: The Coding Teammate That Doesn’t Need Hand-Holding

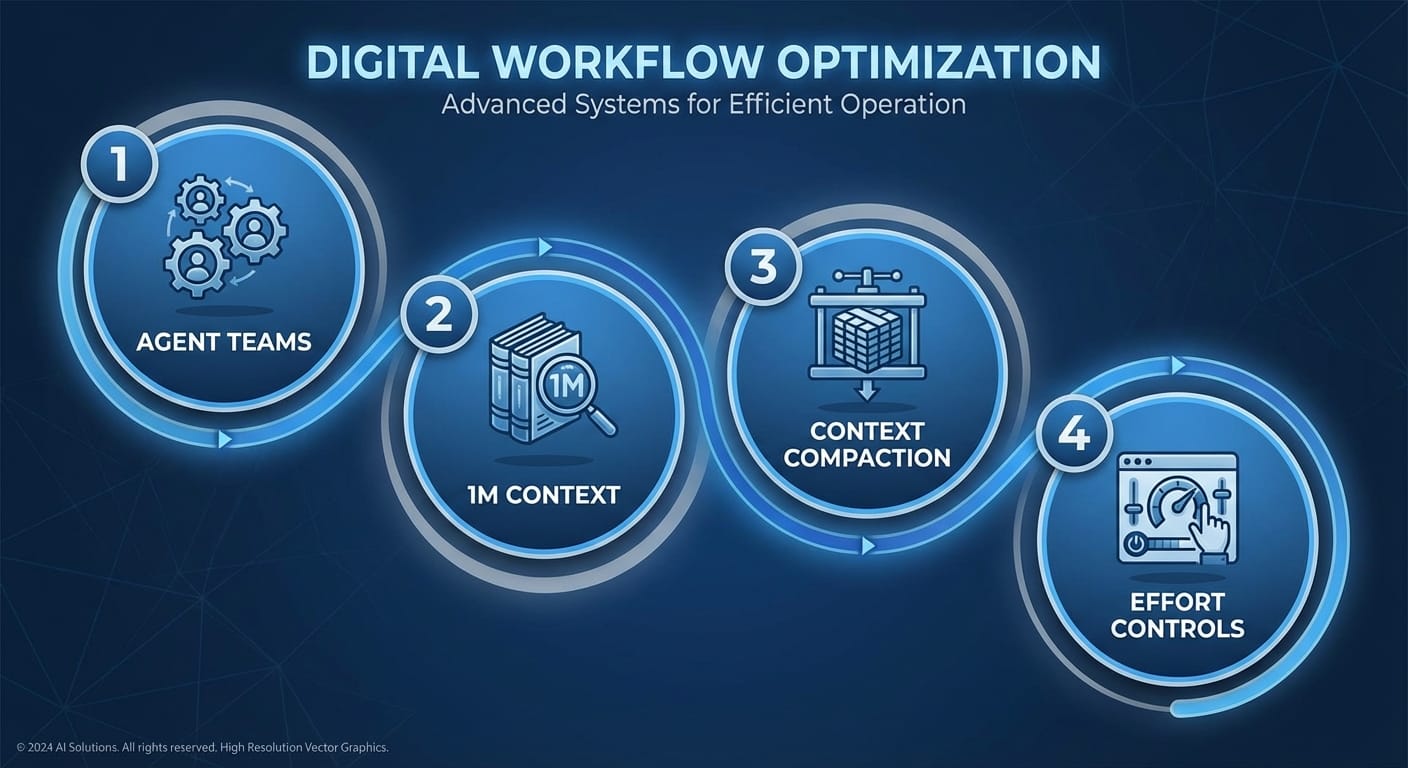

Claude Opus 4.6 adds Agent Teams, a 1M token context window, context compaction, and better planning/debugging—turning it into a coding collaborator that can handle long, real-world work without constant hand-holding.

Imagine this: you drop an entire repo into an AI, ask it to untangle a nasty bug, write tests, and open a clean PR… and it doesn’t forget what you said 20 minutes ago.

That’s the vibe with Anthropic’s Claude Opus 4.6 (released Feb 5, 2026). It’s not just “better at code.” It’s better at working like a developer—planning, reviewing, debugging, and staying useful across long sessions. And if you’ve ever watched an AI lose the thread halfway through a refactor, you know why that matters.

Problem: AI help is great… until it isn’t

Here’s what most people miss: “AI for coding” tends to break down in three predictable spots:

- Context limits: it can’t hold enough of your codebase to reason consistently.

- Long-horizon work: it’s decent at one-off snippets, but shaky at multi-step changes (refactor + tests + docs + PR notes).

- Reliability under pressure: code review and debugging require patience and precision—not just autocomplete energy.

Opus 4.6 directly targets those pain points with a handful of upgrades that, in practice, change how you can use it day-to-day.

Solution: What’s new in Opus 4.6 (and why you’ll actually care)

Let’s walk through the features that matter most if you write code for a living (or at least pretend to until CI proves otherwise).

1) Agent Teams (research preview): like having a mini-dev squad

Look, I’ll be honest… “multiple agents” sounded like marketing fluff the first time I heard it. But the idea is solid: instead of one model doing everything serially, Agent Teams can split a complex job into parallel subtasks—more like a team of specialists than one overworked generalist.

In a coding workflow, that could look like:

- Agent A: scans the repo architecture and identifies the right module

- Agent B: reproduces the bug and proposes likely root causes

- Agent C: drafts the fix and updates tests

- Coordinator: merges findings and produces a coherent plan/PR description
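If you want a mental model for the coordinator step, here’s a minimal fan-out/fan-in sketch in Python. It shows the general pattern only and is not how Anthropic implements Agent Teams. `Finding`, `run_agent`, and `coordinate` are hypothetical names, and each “agent” is a stub where a real setup would call a model.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Finding:
    role: str
    summary: str


async def run_agent(role: str, task: str) -> Finding:
    # Hypothetical stand-in for a specialist agent; a real one would call a model.
    await asyncio.sleep(0)  # yield control so the subtasks can interleave
    return Finding(role=role, summary=f"{role} finished: {task}")


async def coordinate(subtasks: dict[str, str]) -> str:
    # Fan out: start every specialist concurrently.
    findings = await asyncio.gather(
        *(run_agent(role, task) for role, task in subtasks.items())
    )
    # Fan in: gather() keeps input order, so the merged plan is deterministic.
    lines = [f"- {f.summary}" for f in findings]
    return "Plan:\n" + "\n".join(lines)


if __name__ == "__main__":
    plan = asyncio.run(coordinate({
        "architecture": "identify the module that owns session refresh",
        "repro": "reproduce the bug and list likely root causes",
        "fix": "draft the fix and update tests",
    }))
    print(plan)
```

The part worth copying is the fan-in: specialists work in parallel, but one place owns the merged plan, and that is the natural spot for you to review it before anything lands.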

Anthropic also mentions you can intervene quickly (e.g., shortcuts like Shift+Up/Down) when you want to steer the team mid-flight. That’s important—because autonomy is great, but you still own the merge button. [1][3]

2) 1M token context window (beta): bring the whole codebase

This is the headliner for devs: a 1M token context window in beta. Translation: you can shove a lot more code into the prompt and keep it “in working memory.” That means fewer hacks like “here are 12 files, now ignore the rest.”

Anthropic reports 76% on long-context benchmarks for this capability—basically signaling it’s not just big, it’s usable big. [1][2][3][4]

Where this helps you immediately:

- Repo-wide refactors: rename, rewire, and update usages without missing half the callsites.

- Cross-cutting bugs: auth, caching, and async issues that live across layers.

- Consistent style: it can “see” your patterns instead of inventing new ones every file.
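Before dumping a repo in, it helps to know roughly how many tokens it is. Here’s a quick Python estimator, assuming the common rule of thumb of about 4 characters per token (real tokenizers differ, especially on code). The extension list, skipped directories, and function names are my own assumptions; the 200K threshold comes from the pricing note in the FAQ.

```python
import os

# Assumption: ~4 characters per token. Real tokenizers vary (code often
# tokenizes less efficiently than prose), so treat results as rough estimates.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 1_000_000      # the 1M-token beta window
PREMIUM_THRESHOLD = 200_000    # API pricing is higher beyond 200K tokens

# Hypothetical choices: which files count as "code" and which dirs to skip.
SOURCE_EXTENSIONS = {".py", ".ts", ".js", ".go", ".rs", ".java", ".md"}
SKIP_DIRS = {".git", "node_modules", "dist", "build"}


def estimate_tokens(root: str) -> int:
    """Estimate the token count of source files under `root`."""
    total_chars = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune in place so os.walk never descends into skipped directories.
        dirnames[:] = [d for d in dirnames if d not in SKIP_DIRS]
        for name in filenames:
            if os.path.splitext(name)[1] in SOURCE_EXTENSIONS:
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as fh:
                    total_chars += len(fh.read())
    return total_chars // CHARS_PER_TOKEN


def classify(tokens: int) -> str:
    """Map an estimate onto the thresholds mentioned in this post."""
    if tokens > CONTEXT_LIMIT:
        return "too big: send a slice of the repo"
    if tokens > PREMIUM_THRESHOLD:
        return "fits in 1M, but above 200K (premium API pricing)"
    return "fits comfortably"


if __name__ == "__main__":
    n = estimate_tokens(".")
    print(f"~{n:,} tokens: {classify(n)}")
```

Remember the window also has to hold your instructions and the model’s output, so leave headroom rather than aiming for exactly 1M.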

3) Context Compaction (beta): long sessions without hitting a wall

If you’ve ever had a great AI session, then watched it degrade because the conversation got too long… yeah. Same.

Context compaction (beta) is basically a smarter “memory compression” mechanism: it summarizes older parts of the session so you can keep going without losing the thread. That’s a huge deal for real work—debugging sessions, multi-module changes, or building a feature over a few hours. [2][3]
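To make “summarize older parts of the session” concrete, here’s a minimal Python sketch of one possible policy: once the history exceeds a token budget, fold everything except the last few turns into a single summary message. This is not Anthropic’s compaction algorithm. `compact`, `Message`, the 4-characters-per-token estimate, and the `summarize` callback (which in practice would be another model call) are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    role: str
    text: str


def rough_tokens(text: str) -> int:
    # Assumption: ~4 characters per token; swap in a real tokenizer if you have one.
    return max(1, len(text) // 4)


def compact(
    history: list[Message],
    budget: int,
    keep_recent: int,
    summarize: Callable[[list[Message]], str],
) -> list[Message]:
    """Fold older turns into one summary once the history exceeds `budget` tokens."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    used = sum(rough_tokens(m.text) for m in history)
    if used <= budget or len(history) <= keep_recent:
        return history  # under budget, or nothing old enough to fold
    older, recent = history[:-keep_recent], history[-keep_recent:]
    summary = Message(role="user", text="Summary of earlier work: " + summarize(older))
    # The latest turns stay verbatim so the model keeps exact recent details.
    return [summary] + recent


if __name__ == "__main__":
    history = [
        Message("user", "Investigate the flaky auth test. " * 20),
        Message("assistant", "Root cause looks like a token refresh race. " * 20),
        Message("user", "OK, draft the fix."),
    ]
    compacted = compact(history, budget=200, keep_recent=1,
                        summarize=lambda ms: f"{len(ms)} earlier turns on the auth bug")
    for m in compacted:
        print(m.role, "|", m.text[:60])
```

The trade-off is the usual one: a summary keeps the thread but loses detail, which is why the most recent turns are kept word for word.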

4) Better planning, debugging, and code review: the unsexy superpower

Here’s the thing… raw code generation is the easiest part. The real value is whether the model can:

- form a plan before changing files,

- spot edge cases,

- catch errors in logic,

- and review code like a thoughtful teammate.

Anthropic positions Opus 4.6 as stronger in agentic workflows (tool calling, multi-step work) and more reliable for planning/debugging/review. Early feedback highlights it performing better on “long-horizon tasks” where earlier models face-planted. [3][4]

5) Adaptive thinking & effort controls: spend the brainpower where it counts

Opus 4.6 adds thinking/effort controls (low/medium/high/max). Simple tasks? Keep it light and fast. Complex issues? Turn it up and let it reason more deeply. [2][3]

Practical example:

- Low: generate a DTO, write boilerplate tests, add JSDoc

- High/Max: analyze a deadlock, propose a safer concurrency model, or design a migration plan
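If you call the model from your own tooling, a tiny router keeps you from spending max effort on JSDoc. This Python sketch only chooses one of the four levels named above. The keyword table and `pick_effort` are hypothetical, and how you actually pass the level to the model depends on your client, so check Anthropic’s docs for the exact parameter.

```python
import re
from enum import IntEnum


class Effort(IntEnum):
    # Ordered so that max() picks the most demanding matching level.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    MAX = 4


# Hypothetical keyword table mirroring the examples in this section.
KEYWORD_EFFORT = {
    "dto": Effort.LOW,
    "boilerplate": Effort.LOW,
    "jsdoc": Effort.LOW,
    "refactor": Effort.HIGH,
    "concurrency": Effort.HIGH,
    "deadlock": Effort.MAX,
    "migration": Effort.MAX,
    "architecture": Effort.MAX,
}


def pick_effort(task: str) -> str:
    """Return "low", "medium", "high", or "max" for a task description."""
    # Match whole words, not substrings, so short keywords can't fire inside longer words.
    words = re.findall(r"[a-z0-9]+", task.lower())
    matches = [KEYWORD_EFFORT[w] for w in words if w in KEYWORD_EFFORT]
    return max(matches, default=Effort.MEDIUM).name.lower()


if __name__ == "__main__":
    for task in ["Generate a DTO for the orders API",
                 "Analyze a deadlock in the job scheduler",
                 "Fix the off-by-one in pagination"]:
        print(f"{pick_effort(task):>6}  {task}")
```

Falling back to medium when nothing matches is a judgment call: you can always rerun a weak answer at a higher level instead of paying for max effort on every request.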

6) Computer use + tool integration: fewer copy/paste gymnastics

Anthropic also points to improved computer use, visual understanding, and tool/app navigation, meaning the model can orchestrate work across tools more smoothly (think: jumping between editor, terminal, browser docs, and ticket system). For teams, Anthropic cites governed agent setups through partners such as Microsoft Foundry. [2]

The bottom line is: it’s aiming to be a workflow engine, not just a chat box that spits out code.
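Under the hood, tool integration is a loop: the model asks for a tool, your code runs it, and you send the result back. Here’s a minimal Python sketch of the “your code runs it” half. The dict shapes follow the Anthropic Messages API’s `tools` definitions and `tool_use`/`tool_result` content blocks as I understand them (verify against the current docs); the `run_tests` tool and `handle_tool_use` function are hypothetical, and no API call is made here.

```python
from typing import Any, Callable

# A tool definition in the shape passed via the Messages API `tools` parameter.
# The tool itself is hypothetical.
RUN_TESTS_TOOL = {
    "name": "run_tests",
    "description": "Run the test suite for a path and return a short summary.",
    "input_schema": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Test file or directory"},
        },
        "required": ["path"],
    },
}


def run_tests(path: str) -> str:
    # Stub: a real handler might shell out to your test runner.
    return f"ran tests in {path}: 0 failures (stubbed)"


HANDLERS: dict[str, Callable[..., str]] = {"run_tests": run_tests}


def handle_tool_use(block: dict[str, Any]) -> dict[str, Any]:
    """Execute one `tool_use` block and build the matching `tool_result` block."""
    result: dict[str, Any] = {"type": "tool_result", "tool_use_id": block["id"]}
    handler = HANDLERS.get(block["name"])
    if handler is None:
        result.update(content=f"unknown tool: {block['name']}", is_error=True)
        return result
    try:
        result["content"] = handler(**block["input"])
    except Exception as exc:  # report failures to the model instead of crashing the loop
        result.update(content=f"{type(exc).__name__}: {exc}", is_error=True)
    return result


if __name__ == "__main__":
    fake_block = {"type": "tool_use", "id": "toolu_example", "name": "run_tests",
                  "input": {"path": "tests/auth"}}
    print(handle_tool_use(fake_block))
```

Returning errors as `tool_result` content (rather than raising) lets the model see what went wrong and try a different call.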

Pro Tips Box: How I’d use Opus 4.6 on a real codebase

1) Start with “read-only mode.” Ask it to map the repo: key folders, entry points, data flow, and risky areas.

2) Force a plan before edits. Prompt: “Give me a 6-step plan, then wait for my approval before changing anything.”

3) Use Agent Teams for parallel work. One agent hunts regressions, another drafts tests, another reviews for security/perf.

4) Turn effort up only when needed. Max effort for architecture or debugging; low effort for the boring stuff.
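If you drive the model from a script, you can enforce tip 2 in code instead of trusting the prompt. Here’s a minimal Python sketch of a checkpoint gate: edits raise an error until a human approves a plan of at most six steps. `CheckpointedSession` and its stages are hypothetical; wire the method calls to whatever actually talks to the model in your setup.

```python
from enum import Enum, auto


class Stage(Enum):
    ANALYSIS = auto()
    PLAN = auto()
    AWAITING_APPROVAL = auto()
    EDITING = auto()


class CheckpointedSession:
    """Hypothetical gate: edits are refused until a human approves the plan."""

    def __init__(self, max_steps: int = 6) -> None:
        self.stage = Stage.ANALYSIS
        self.max_steps = max_steps
        self.plan: list[str] = []

    def finish_analysis(self) -> None:
        self._require(Stage.ANALYSIS)
        self.stage = Stage.PLAN

    def propose_plan(self, steps: list[str]) -> None:
        self._require(Stage.PLAN)
        if not steps or len(steps) > self.max_steps:
            raise ValueError(f"plan must have 1..{self.max_steps} steps")
        self.plan = steps
        self.stage = Stage.AWAITING_APPROVAL

    def approve(self) -> None:
        self._require(Stage.AWAITING_APPROVAL)
        self.stage = Stage.EDITING

    def reject(self) -> None:
        # Send the model back to planning rather than letting it edit.
        self._require(Stage.AWAITING_APPROVAL)
        self.plan = []
        self.stage = Stage.PLAN

    def apply_edit(self, description: str) -> str:
        self._require(Stage.EDITING)
        return f"applied: {description}"

    def _require(self, expected: Stage) -> None:
        if self.stage is not expected:
            raise RuntimeError(f"expected {expected.name}, currently {self.stage.name}")


if __name__ == "__main__":
    s = CheckpointedSession()
    s.finish_analysis()
    s.propose_plan(["reproduce", "locate root cause", "fix", "update tests"])
    s.approve()  # the human-in-the-loop step
    print(s.apply_edit("patch session refresh"))
```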

Common mistakes (aka how people waste fancy models)

- Dumping code without a goal: even with 1M tokens, you need a crisp objective (“fix failing test X”, “reduce p95 latency”).

- Skipping constraints: tell it your framework versions, lint rules, and “do not touch” modules.

- Not asking for tests: if it changes behavior, require test updates—non-negotiable.

- Letting it run wild: autonomy is great, but you still want checkpoints (“stop after analysis”, “stop after plan”).
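The four mistakes above map neatly onto a reusable task brief. Here’s a Python sketch that renders the objective, constraints, test requirement, and checkpoint into a prompt, and checks a proposed list of changed files against “do not touch” patterns. `TaskBrief`, its fields, and the glob-based protection check are my own assumptions, not a feature of Claude Code.

```python
import fnmatch
from dataclasses import dataclass, field


@dataclass
class TaskBrief:
    objective: str                                        # e.g. "fix failing test X"
    constraints: list[str] = field(default_factory=list)  # versions, lint rules, ...
    protected: list[str] = field(default_factory=list)    # glob patterns not to touch
    require_tests: bool = True
    stop_after: str = "plan"                              # checkpoint: "analysis" or "plan"

    def render(self) -> str:
        """Turn the brief into prompt text, refusing briefs without a real goal."""
        if not self.objective.strip():
            raise ValueError("a brief needs a concrete objective")
        if self.stop_after not in ("analysis", "plan"):
            raise ValueError("stop_after must be 'analysis' or 'plan'")
        lines = [f"Objective: {self.objective}"]
        lines += [f"Constraint: {c}" for c in self.constraints]
        if self.protected:
            lines.append("Do not modify: " + ", ".join(self.protected))
        if self.require_tests:
            lines.append("Any behavior change must come with updated tests.")
        lines.append(f"Stop after the {self.stop_after} and wait for approval.")
        return "\n".join(lines)

    def violations(self, changed_files: list[str]) -> list[str]:
        """Return changed files that match any protected glob pattern."""
        return [f for f in changed_files
                if any(fnmatch.fnmatch(f, p) for p in self.protected)]


if __name__ == "__main__":
    brief = TaskBrief(
        objective="fix failing test test_refresh_token",
        constraints=["Python 3.11", "keep ruff clean"],
        protected=["src/legacy/*", "migrations/*"],
    )
    print(brief.render())
    print(brief.violations(["src/auth/session.py", "src/legacy/billing.py"]))
```

Running `violations` on the model’s proposed diff before applying it turns “do not touch” from a polite request into a check.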

FAQ

Is Opus 4.6 actually available for me?

Yes. Anthropic notes availability via the API, Claude Code, and partner platforms, with premium API pricing once a request’s context goes beyond 200K tokens. Agent Teams is available as a research preview for subscribers. [1][2][7]

Do I need 1M tokens to benefit?

No. Even at smaller context sizes, better planning/debugging and effort controls help. The 1M window is a force multiplier when you’re dealing with sprawling repos or lots of documentation. [1][2]

What’s the single best use case?

Long, messy, real-world tasks: “trace the bug across layers, propose a fix, update tests, and produce a review-ready patch.” That’s where improved agentic workflows and compaction shine. [3][4]

Action challenge: try this on your next bug

Next time you hit a bug that spans more than one file, do this:

- Paste the relevant modules (or repo slice) and ask for a root-cause analysis.

- Make it produce a step-by-step plan before editing code.

- Require tests + a rollback plan.

If Opus 4.6 does what it’s built for, you’ll feel the difference: fewer shallow guesses, more coherent multi-step progress, and way less babysitting.

Sources

- [1] Anthropic — Release announcement/details on Opus 4.6 features (Agent Teams, availability), Feb 2026.

- [2] Anthropic — Technical/product notes on 1M context, compaction, effort controls, tool/computer use, Feb 2026.

- [3] Anthropic — Notes on agentic workflows, coding improvements, long-horizon tasks, Feb 2026.

- [4] Safety/evals & long-context performance references associated with Opus 4.6, Feb 2026.

- [7] Partner/API availability notes (API pricing tiers beyond 200K tokens), Feb 2026.