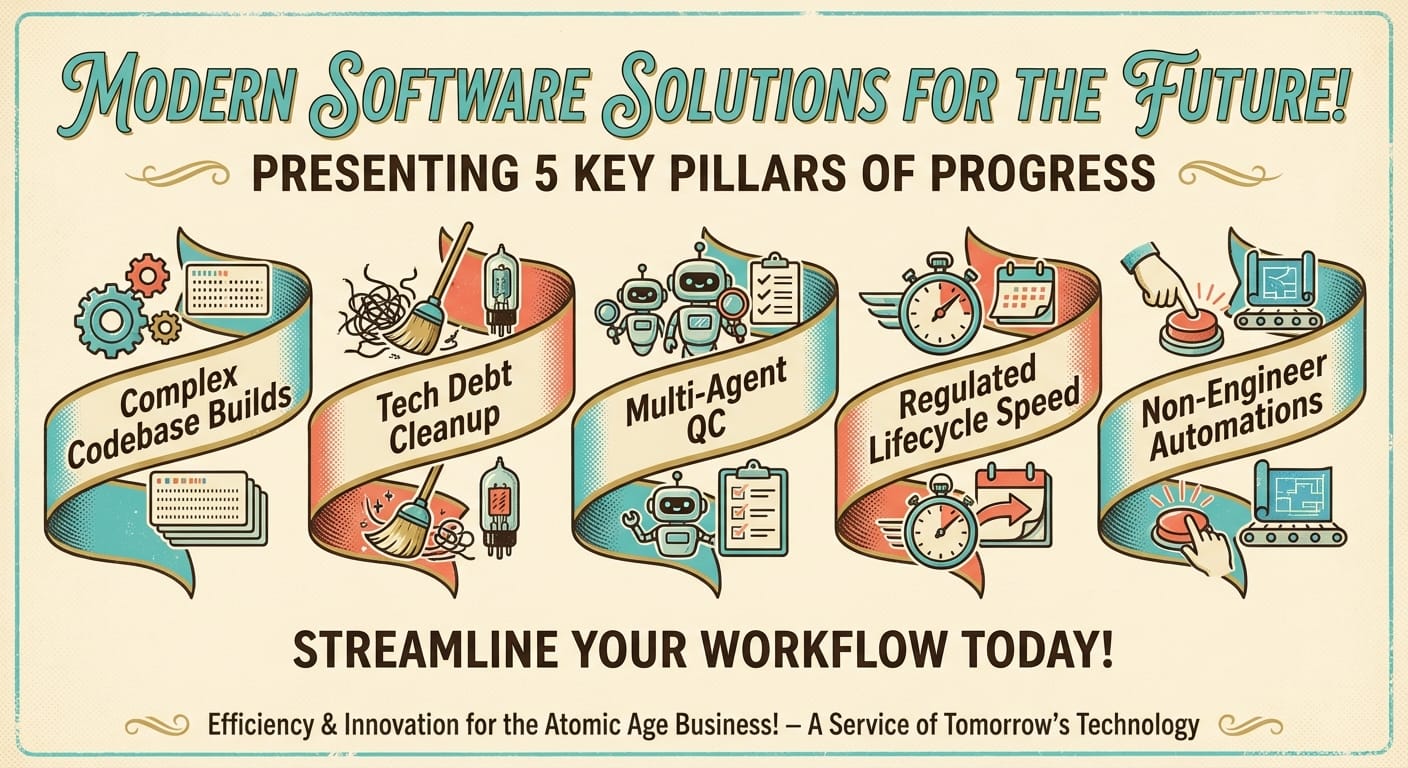

5 Claude Code Agent Use Cases That Actually Move the Needle (Not Just “Cool Demos”)

Here are 5 real-world use cases for AI agents in Claude Code, where they save weeks, crush tech debt, and level up quality without chaos.

Hot take: most people talk about “AI agents” like they’re magic interns… and then they use them to rename variables and write TODO comments. Come on. If you’re going to let software run around your repo making decisions, it better be earning its keep.

So what does that look like in the real world with agents inside Claude Code? Think: autonomous coding across big codebases, parallel agents doing reviews, and end-to-end workflows that don’t fall apart the second you mention compliance.

Let’s walk through five use cases I’m seeing win in 2026 deployments—plus how you can steal the playbook without lighting your codebase on fire.

1) Shipping complex implementations in massive codebases (without a multi-month death march)

If you’ve ever tried to land a non-trivial feature in a huge codebase, you know the pain: you spend 70% of your time just understanding what you’re touching. Then you break something three directories away and spend the weekend apologizing in Slack.

Claude Code agents shine when the task is:

- deeply technical,

- spread across many files,

- and needs careful validation.

A real example: an agent autonomously implemented an activation vector extraction method in vLLM, a gigantic open-source library (reported at 12.5 million lines), in about 7 hours with 99.9% numerical accuracy. That’s the kind of task that normally turns into “let’s schedule a Q3 initiative” energy. Instead, it became a single day’s work. Source: Anthropic Claude Code case material [1].

Practical advice: Don’t just say “implement X.” Give the agent a tight spec: expected inputs/outputs, a reference implementation (even if slow), and acceptance tests. Agents do better with guardrails—same as humans, honestly.
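
Here’s a minimal sketch of what “reference implementation plus acceptance test” can look like. Everything in it is illustrative: the softmax functions are stand-ins (not the vLLM task), and `accept`, the tolerance, and the trial count are assumptions you’d swap for your own spec.

```python
import math
import random

def reference_softmax(xs):
    """Slow-but-obvious reference: the textbook definition."""
    exps = [math.exp(x) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def candidate_softmax(xs):
    """Stand-in for the agent's version (subtracts the max for stability)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def max_relative_error(expected, actual):
    """Largest element-wise relative error between two equal-length lists."""
    return max(abs(e - a) / max(abs(e), 1e-12) for e, a in zip(expected, actual))

def accept(candidate, reference, trials=200, tolerance=1e-9, seed=0):
    """Acceptance test: candidate must match the reference on random inputs."""
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    for _ in range(trials):
        xs = [rng.uniform(-5.0, 5.0) for _ in range(rng.randint(1, 16))]
        if max_relative_error(reference(xs), candidate(xs)) > tolerance:
            return False
    return True
```

The point isn’t softmax. It’s that “done” becomes a function the agent can run on its own, as often as it likes, before a human ever looks at the diff.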

Case Study Snippet (realistic)

Let’s say you need to add a new caching layer to a service that’s been around since the Jurassic period (aka 2017). Your agent can:

- map the request lifecycle,

- identify where to hook cache reads/writes,

- implement the change across modules,

- and generate load-test scripts to validate impact.

You review the diff like a grown-up, run CI, and ship. No heroics required.
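
For a feel of the kind of change you’d be reviewing, here’s a hedged sketch of a read-through cache with a TTL. The class name, the `loader` callback, and the hit/miss counters are all hypothetical; a real service would likely sit on Redis or similar, with proper invalidation rules.

```python
import time

class ReadThroughCache:
    """Tiny in-process read-through cache with per-entry expiry."""

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader      # called on a miss to fetch the real value
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so tests can control time
        self._entries = {}         # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired: load from the source of truth and store it.
        self.misses += 1
        value = self._loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key):
        """Drop a key after a write so the next read reloads it."""
        self._entries.pop(key, None)
```

The injectable clock and the counters are there on purpose: they make the change testable and measurable, which is exactly what you want to see in an agent’s PR.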

2) Eating technical debt and backlog tickets like it’s lunch (because it is)

Technical debt is like junk drawers. Everybody has one. Nobody wants to deal with it. And then one day you need a screwdriver and all you’ve got is three expired coupons and a mystery key.

Agents are great at the “unsexy but valuable” work:

- updating deprecated APIs,

- refactoring repetitive patterns,

- adding missing tests,

- and closing backlog tickets that have been haunting your sprint board.

One enterprise reportedly used Claude-powered tools to complete what was estimated as a 4–8 month project in 2 weeks by giving agents enough context across networking, databases, and storage. That’s not a small win—that’s a staffing plan rewrite. Source: [1].

My stance: this is the highest ROI place to start. New features are sexy, but debt reduction makes every future feature cheaper.
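
To make the “updating deprecated APIs” item concrete, here’s a small sketch that scopes the work before any agent touches it. It uses Python’s standard `ast` module to list calls to deprecated names. The `DEPRECATED` mapping is an illustrative assumption; fill it with whatever your codebase actually needs to migrate.

```python
import ast

# Illustrative mapping of deprecated call names to suggested replacements.
DEPRECATED = {
    "assertEquals": "assertEqual",
    "getchildren": "list(element)",
}

def find_deprecated_calls(source, deprecated=DEPRECATED):
    """Return sorted (line, old_name, suggestion) for each deprecated call."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Handle both obj.method(...) and plain function(...) calls.
        if isinstance(func, ast.Attribute):
            name = func.attr
        else:
            name = getattr(func, "id", None)
        if name in deprecated:
            findings.append((node.lineno, name, deprecated[name]))
    return sorted(findings)
```

A list like this doubles as the ticket’s acceptance criterion: the work is done when the scan comes back empty and the tests still pass.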

3) Multi-agent parallel development (and “second brain” quality control)

Here’s where things get spicy. A single agent can do solid work. But a hierarchical multi-agent setup is where you start to feel like you’ve got an entire mini-org on tap.

Typical pattern I like:

- Builder agent writes the implementation

- Tester agent generates edge-case tests and fuzz cases

- Reviewer agent checks architecture consistency, readability, performance

- Security agent hunts for common vuln patterns and bad secrets hygiene

Organizations are using this approach for security reviews and architectural consistency checks on large-scale AI-generated code. And outside pure software: Fountain used Claude-orchestrated agents and reported 50% faster screening and 2× candidate conversions—a nice reminder that “agents” are a workflow concept, not just a coding trick. Sources: [1], [2].

Practical advice: don’t let agents review their own work in a single thread. Split roles. Separate contexts. You want healthy disagreement—like code review, but without anyone getting defensive.
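
Here’s one way to sketch “split roles, separate contexts” in code. To be clear, this is not a Claude Code API: `agent` is a hypothetical callable you’d wire up to whatever actually runs your model, and the role names and instructions are just the pattern from the list above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Role:
    name: str
    instructions: str
    history: List[str] = field(default_factory=list)  # private context per role

# Hypothetical signature: takes a role and a payload, returns the agent's text.
AgentFn = Callable[[Role, str], str]

def run_pipeline(task: str, agent: AgentFn) -> Dict[str, str]:
    """Run the builder once, then each checker in its own fresh context."""
    builder = Role("builder", "Write the implementation.")
    checkers = [
        Role("tester", "Propose edge-case and fuzz tests."),
        Role("reviewer", "Check architecture, readability, performance."),
        Role("security", "Look for vulnerability patterns and leaked secrets."),
    ]
    diff = agent(builder, task)
    builder.history.append(diff)
    reports = {"builder": diff}
    for role in checkers:
        # Checkers see only the task and the diff, never the builder's history.
        report = agent(role, f"TASK:\n{task}\n\nDIFF:\n{diff}")
        role.history.append(report)
        reports[role.name] = report
    return reports
```

The design choice that matters is the payload: each checker gets the task and the diff, not the builder’s reasoning, so it can’t simply agree with how the builder talked itself into the change.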

4) End-to-end lifecycle acceleration in regulated industries (where “move fast” usually dies)

Regulated industries don’t hate speed. They hate uncontrolled speed. (Fair.) The win with Claude Code agents is you can speed up the lifecycle while keeping a paper trail: changes, tests, reasoning, approvals.

Fintech firm CRED reportedly doubled execution speed across the development lifecycle using Claude Code agents for code generation, debugging, and testing—while maintaining quality for a large user base (reported at 15 million users). Sources: [1], [4].

Real-world analogy: It’s like moving from handwritten bookkeeping to accounting software. You’re not skipping the rules—you’re automating the busywork so humans can focus on the judgment calls.

Practical advice: pair agents with your compliance workflow:

- Require test evidence in PR descriptions

- Auto-generate change logs

- Use Linear/Jira to tie commits to requirements

- Keep approvals human (seriously)
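
The first and third items are easy to enforce mechanically. Below is a rough sketch of a CI-side check on the PR description. The section headings and the ticket-key pattern are assumptions about your template, not anything GitHub, Linear, or Jira mandates.

```python
import re

# Assumed ticket key shape, e.g. PAY-123; adjust to your tracker's format.
TICKET_PATTERN = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")

# Hypothetical headings your PR template would require.
REQUIRED_SECTIONS = ("## Test evidence", "## Change log")

def check_pr_description(body):
    """Return a list of problems; an empty list means the PR may proceed."""
    problems = []
    lowered = body.lower()
    for heading in REQUIRED_SECTIONS:
        if heading.lower() not in lowered:
            problems.append(f"missing section: {heading}")
    if not TICKET_PATTERN.search(body):
        problems.append("no ticket key (expected something like ABC-123)")
    return problems
```

A check like this only gates the paperwork. The approval itself stays with a human, per the last bullet.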

5) Letting non-engineers build agentic automations (without turning IT into a helpdesk)

This one sneaks up on people. You think Claude Code is “for devs.” Then your legal team starts shipping workflows faster than your backend team. Suddenly you’re asking, “Wait… who gave them superpowers?”

Domain experts—lawyers, ops folks, analysts—can use agent workflows to:

- triage requests,

- draft and redline documents,

- generate internal tools,

- and create repeatable processes that used to live in someone’s head.

Examples from the field: Legora’s CEO praised Claude for strong instruction-following in agent workflows, and Anthropic’s legal team cut marketing review from 2–3 days down to ~24 hours using contract redlining and triage agents. Source: [1].

My stance: this is the sleeper hit. The biggest bottlenecks in companies are often non-technical workflows glued together with meetings.

The Bottom Line (TL;DR)

Claude Code agents are best when the work is: big-context, multi-step, and measurable. If you can’t define “done,” don’t hand it to an agent (or a human, for that matter).

Common Mistakes (don’t do these)

- Letting an agent roam main: Use branches, PRs, and CI like you mean it.

- Vague prompts: “Refactor this” is how you get creative chaos. Specify outcomes and constraints.

- No evals/tests: If you can’t test it, you can’t trust it. Period.

- Single-agent monoculture: Multi-agent checks catch weirdness early—especially security/perf regressions.

Quick Wins (you can do these this week)

- Pick one nasty backlog ticket and have an agent propose a PR + tests.

- Set up a reviewer agent that only comments on architecture and naming consistency.

- Create a “definition of done” template the agent must fill out (tests, benchmarks, rollout plan).
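
If you want that “definition of done” template to be machine-checkable, a sketch like this is enough to start. The three fields mirror the ones named above; the class and method names are made up for illustration.

```python
from dataclasses import dataclass, fields

@dataclass
class DefinitionOfDone:
    """Template the agent must fill out before a PR counts as finished."""
    tests: str = ""
    benchmarks: str = ""
    rollout_plan: str = ""

    def missing(self):
        """Names of fields that are still empty or whitespace-only."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def is_done(self):
        return not self.missing()
```

Have the agent return this alongside the diff, and bounce anything where `missing()` isn’t empty.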

FAQ

Do I need multi-agent setups on day one?

Nope. Start single-agent for implementation, then add a reviewer agent once you trust the workflow.

Will agents replace senior engineers?

I don’t buy it. Agents replace waiting: waiting to understand context, waiting to write boilerplate, waiting to chase down edge cases. Senior judgment still matters.

What tools does Claude Code pair well with?

GitHub (PRs/CI), Linear (task tracking), and your test runner of choice. Agents are only as useful as the rails you put them on.

All 5 Use Cases (recap)

- Complex implementations in huge codebases

- Technical debt + backlog elimination

- Multi-agent parallel dev + quality control

- Regulated lifecycle acceleration

- Non-engineer agentic automations

Sources

- [1] Anthropic: Claude Code / agentic deployments and case studies (compiled examples including vLLM implementation, enterprise tech debt reduction, legal workflow acceleration). Provided research brief referencing Anthropic materials. https://www.anthropic.com

- [2] Fountain: reported outcomes from Claude-orchestrated agent workflows (screening speed and conversion improvements). Provided research brief. https://www.fountain.com

- [3] vLLM: open-source LLM inference library referenced in Claude Code implementation example. https://github.com/vllm-project/vllm

- [4] CRED: fintech company referenced for lifecycle acceleration example (context from provided research brief). https://cred.club

Action Challenge

Pick one real task you’ve been punting—something with clear acceptance criteria—and run it through a Claude Code agent this week. Then do the adult part: review the diff, run tests, and write down what rules made it succeed. Rinse, repeat, and you’ll have an “agent playbook” faster than you think.