AI Power Crunch: Will the Grid Keep Up?

AI isn’t just eating GPUs—it’s eating megawatts. Here’s what’s really bottlenecking the grid, what fixes are plausible, and what operators can do today to avoid getting stuck in the time-to-power trap.

AI isn’t “just software” anymore. It’s a physical industry with a very real appetite for electricity — and the bill is coming due. If you’ve been treating AI like it lives in the cloud (read: “someone else’s problem”), I’ve got bad news: the cloud plugs into your grid.

And here’s my hot take: the grid can keep up… but only if we stop pretending this is a future problem. The power crunch is already here, and the winners will be the folks who plan like adults, not like hype merchants.

The problem (in plain English): AI workloads are spiky, huge, and nonstop

Let’s translate “AI power crunch” into something normal people can picture.

Training a big AI model is like moving your entire house… every day… with a fleet of semi trucks… and you can’t shift the move to the quiet hours, because the trucks never stop running. That’s what utilities see when a new data center shows up and says, “Hey, can I get a few hundred megawatts? Also, I need it fast. Also, uptime has to be flawless.”

Utilities aren’t lazy. The grid is just slow-moving infrastructure. New transmission lines can take close to a decade in the U.S. because of permitting and siting. The U.S. Department of Energy has been blunt about transmission delays and the need to modernize and expand the system to meet load growth and clean energy goals. (DOE National Transmission Needs Study)

Meanwhile, data centers are expanding like someone hit the “copy/paste” button. The International Energy Agency expects data centers’ electricity use to grow materially this decade, driven in large part by AI. (IEA Electricity 2024)

So… will the grid keep up?

Yes, if we treat this like the industrial expansion it is. No, if we keep doing what we’re doing: slow interconnection queues, local “not in my backyard” fights, and building generation without enough wires to move the power.

The Bottom Line

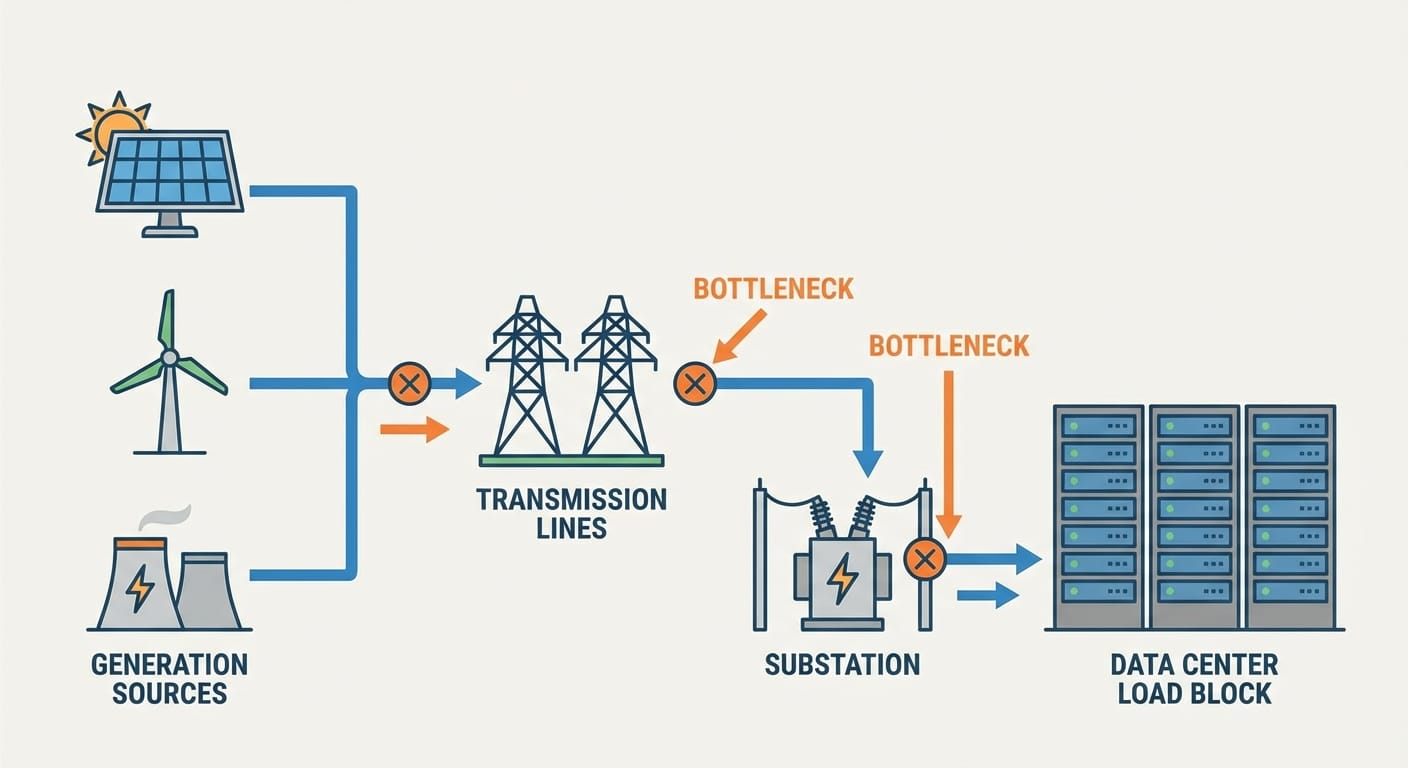

AI is forcing three upgrades at once: more power, more grid capacity, and more flexibility (because AI demand isn’t polite). If any one of those lags, you get bottlenecks, higher prices, and “sorry, can’t connect you yet” letters.

What’s actually needed (solution mode)

Here’s where I’m opinionated: the answer isn’t one magic tech. It’s a bunch of boring-but-effective moves stacked together — like financial fitness. Nobody gets rich off one weird trick; you do it by compounding good decisions.

1) Build more generation (but not just “more”)

We need new supply, period. Gas, nuclear, wind, solar — pick your mix, but the math doesn’t care about vibes. The North American Electric Reliability Corporation (NERC) has warned about reliability risk as demand rises and the resource mix shifts. (NERC Reliability Assessments)

My stance: we’re going to need firm power (resources that can run when you ask them to) alongside renewables. That can mean gas with a long-term plan to decarbonize, nuclear life extensions, geothermal, or storage paired with renewables. But pretending we can power an AI boom with only “whatever the weather gives us” is wish-casting.

2) Transmission: the unsexy hero

People love posting solar panel pics. Nobody posts transmission lines because, well, they’re not cute. But transmission is how you turn “we have power somewhere” into “you can use it here.”

DOE and FERC have both been pushing reforms and planning improvements because the old model (incremental upgrades, local planning) doesn’t match today’s load growth. (FERC Transmission)

3) Interconnection queues: fix the “DMV line” of energy

If you’ve never heard of interconnection queues, think of them like the world’s worst restaurant waitlist — except instead of brunch, it’s grid access. Generation projects can wait years to get approved and connected.

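FERC’s Order No. 2023 adopted reforms aimed at speeding up and improving the interconnection process: moving from first-come, first-served to a “first-ready, first-served” cluster study approach, with firm study deadlines and penalties for transmission providers that miss them. (FERC Interconnection Reforms)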

Will it help? Yes. Will it instantly fix everything? No. But at least we’re admitting the line is too long.

4) Flexibility: make AI a better grid citizen

Here’s a fun twist: not all compute has to run at the same time. Some training jobs can be scheduled. Some inference can be moved. Some workloads can pause for 15 minutes without the world ending.

That means demand response for data centers is a real lever. Utilities already do this with industrial customers; we just haven’t made it “standard operating procedure” for AI campuses yet.
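To make that concrete, here is a minimal sketch of what "pausable compute" could look like in scheduling logic. Everything in it is hypothetical: the `Job` class, the `plan_interval` function, the job names, and the megawatt figures are made up for illustration, and a real demand response program would have its own signals and rules.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    power_mw: float   # draw while running (illustrative numbers)
    deferrable: bool  # True if the job can pause, e.g. checkpointed training


def plan_interval(jobs: list[Job], cap_mw: float) -> tuple[list[str], list[str]]:
    """Return (running, paused) job names for one interval under a power cap.

    Non-deferrable jobs always run, even if they alone exceed the cap.
    Deferrable jobs are admitted largest-first while they still fit.
    """
    running = [j for j in jobs if not j.deferrable]
    used = sum(j.power_mw for j in running)
    paused = []
    for job in sorted((j for j in jobs if j.deferrable), key=lambda j: -j.power_mw):
        if used + job.power_mw <= cap_mw:
            running.append(job)
            used += job.power_mw
        else:
            paused.append(job)
    return [j.name for j in running], [j.name for j in paused]


jobs = [
    Job("inference-api", 30.0, deferrable=False),
    Job("train-large", 50.0, deferrable=True),
    Job("batch-eval", 15.0, deferrable=True),
]

# Normal conditions: the campus may draw up to 100 MW.
print(plan_interval(jobs, cap_mw=100.0))
# Grid-stress event: the utility asks the campus to stay under 50 MW.
print(plan_interval(jobs, cap_mw=50.0))
```

Under the 50 MW cap, the big training job pauses while inference and the small batch job keep running. The greedy largest-first rule is just one simple choice; a real scheduler would also weigh deadlines and checkpoint costs.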

Pro Tips (from someone who’s built systems under real constraints)

- Design for throttling: If your model training can’t slow down, you’re building a grid problem.

- Negotiate interruptible load contracts: Cheaper power if you can curtail when the grid is stressed.

- Use on-site storage smartly: Batteries aren’t just backup — they’re “peak shaving” machines.

- Pick sites like a realist: Cheap land doesn’t matter if there’s no substation capacity.
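The peak-shaving tip is easier to picture with numbers. Below is a rough sketch, assuming an idealized battery (no losses, starts full) and a made-up hourly load profile. The `peak_shave` function and all of its parameters are hypothetical, not a vendor API.

```python
def peak_shave(load_mw, limit_mw, capacity_mwh, max_power_mw, hours_per_step=1.0):
    """Return grid draw per step after a battery tries to hold draw at limit_mw.

    Assumptions: the battery starts full, discharges when load exceeds the
    limit, recharges from the grid when load is below it, and has no losses.
    """
    soc = capacity_mwh  # state of charge, MWh
    grid = []
    for load in load_mw:
        if load > limit_mw:
            # Discharge is bounded by the excess, the inverter rating, and stored energy.
            discharge = min(load - limit_mw, max_power_mw, soc / hours_per_step)
            soc -= discharge * hours_per_step
            grid.append(load - discharge)
        else:
            # Recharge is bounded by headroom under the limit, the inverter
            # rating, and the remaining free capacity.
            charge = min(limit_mw - load, max_power_mw,
                         (capacity_mwh - soc) / hours_per_step)
            soc += charge * hours_per_step
            grid.append(load + charge)
    return grid


hourly_load = [60, 70, 95, 110, 105, 80, 65, 60]  # MW, made-up profile
grid_draw = peak_shave(hourly_load, limit_mw=90, capacity_mwh=40, max_power_mw=20)
print("peak load:", max(hourly_load), "MW; peak grid draw:", max(grid_draw), "MW")
```

In this toy profile, a 40 MWh battery is just enough to hold the utility-facing peak at 90 MW instead of 110 MW. A smaller battery would run dry mid-peak and the grid draw would pop back above the limit, which is the sizing question worth asking before buying one.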

Stats Spotlight: the numbers that should make you sit up

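- Data centers are a fast-growing load, with AI a major driver this decade. The IEA estimates that data centers, AI, and cryptocurrency together used about 460 TWh of electricity in 2022 and could exceed 1,000 TWh by 2026. (IEA)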

- Transmission buildout is slow due to permitting, cost allocation, and planning complexity. (DOE)

- Reliability risk is rising in parts of North America as demand grows and resources change. (NERC)

Common mistakes (don’t do this)

- Assuming “the utility will figure it out”: Utilities plan years out. If you show up late, you’ll wait.

- Picking a site based on tax breaks alone: Incentives don’t magically create transformer capacity.

- Ignoring heat: Power isn’t the only constraint. Cooling water, ambient temperature, and HVAC design matter a lot.

- Overbuilding diesel backup and calling it a strategy: Generators are insurance, not a sustainable growth plan.

Case Study Snippet: the “great location” that wasn’t

Let’s say you’re building an AI training cluster. You find cheap land near a metro area and sign the lease. Then the utility says: “We can give you 20 MW now, maybe 80 MW in 4 years, and 200 MW after a new substation and transmission upgrades.”

What happens next? You either (a) downscope and lose your roadmap, (b) scramble to a second site and duplicate ops pain, or (c) spend a fortune on temporary generation and fuel logistics. I’ve watched versions of this story play out in tech over and over: constraints don’t care about your timeline.
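If you want to see how ugly that gap gets, a back-of-the-envelope sketch helps. The 20 MW and 80 MW steps come from the example above. The utility's answer gives no date for the 200 MW step, so year 7 is purely an assumption, and the roadmap numbers are invented for illustration.

```python
def available_mw(year, offers):
    """Capacity available in `year`, given (start_year, mw) offers sorted by start_year."""
    mw = 0.0
    for start, cap in offers:
        if year >= start:
            mw = cap
    return mw


# 20 MW now, 80 MW in 4 years (from the example). The 200 MW step has no
# date in the utility's answer; year 7 is an assumption for illustration.
utility_offers = [(0, 20.0), (4, 80.0), (7, 200.0)]

# Hypothetical roadmap: MW the operator wants energized in each year.
roadmap = {0: 20.0, 1: 50.0, 2: 100.0, 3: 150.0,
           4: 200.0, 5: 200.0, 6: 200.0, 7: 200.0}

shortfall = {
    year: max(0.0, want - available_mw(year, utility_offers))
    for year, want in roadmap.items()
}
for year, gap in shortfall.items():
    print(f"year {year}: short {gap:.0f} MW")
print("total shortfall:", sum(shortfall.values()), "MW-years")
```

With these made-up inputs the site is short every year from year 1 through year 6, peaking at 130 MW in year 3. That cumulative gap is what options (a), (b), and (c) are each trying to paper over.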

FAQ

Is AI really a big deal for electricity, or is this hype?

It’s real. AI is a meaningful driver of incremental data center load growth, and multiple agencies and analysts are tracking it as a planning issue. (IEA)

Why can’t utilities just build faster?

Permitting, equipment lead times (like large transformers), workforce constraints, and cost allocation fights slow everything down. Transmission especially is a multi-year slog. (DOE)

Will this raise electricity prices for everyone?

In some regions, it can increase congestion and capacity needs, which can put upward pressure on costs. The impact depends on local generation, transmission, regulation, and how costs are recovered.

What’s the fastest lever we have?

Efficiency and flexibility. Better chips, better cooling, workload scheduling, and demand response can reduce peak stress faster than building a new transmission corridor.

Summary Bullets (because you’ve got stuff to do)

- AI is becoming a heavy industry, and heavy industry needs real infrastructure.

- The grid can keep up—but not with business-as-usual permitting and planning.

- Transmission and interconnection are the bottlenecks nobody wants to talk about.

- Data centers can help by being flexible loads: throttle, shift, store, and curtail strategically.

- If you’re building AI infrastructure, pick sites based on power timelines, not vibes.

Actionable takeaways: If you run compute, ask your utility about (1) available capacity today, (2) the upgrade roadmap, and (3) demand response programs. If you’re an investor or operator, bake “time-to-power” into your model like it’s rent. Because it basically is.